Overview of the internet

There are several different types of devices that make up the internet.

- Hosts - Devices/end systems that are at the edge of the network.

- Packet switches - Routers/switches that forward packets to other devices.

- Communication links - Fibre/copper/radio transmission links between devices.

- Networks - A collection of devices, routers and links managed by an organisation. These can be:

- Mobile networks.

- Home networks.

- Enterprise networks.

- Local ISPs

- National ISPs

- Content provider networks

- Datacenter networks

The internet is a network of networks that are generally enabled by interconnected ISPs.

Protocols

Control the sending and receiving of messages. There are many different types for different purposes.

Internet Standards

There are certain organisations that maintain open protocols for the internet:

- RFC - Request for comments.

- IETF - Internet engineering task forces.

Services

Infrastructure provides services to applications. These services provide a programming interface to distributed applications:

- Hooks - Allow sending receiving app to use the internet as a transport service.

- APIs provide service options analogous to the postal service.

Protocol Overview

Define the format, order of messages sent and recieved among network entities, and action taken on message transmission, and receipt.

To find the time the following sequence of actions might happen:

sequenceDiagram

me->>you: Hi

you->>me: Hello

me->>you: Got the time?

you->>me: 2:00 pm

The network edge is made up of the following devices:

- Hosts - Clients and servers.

- Servers - Often in data centres.

The hosts are connected to the internet via access networks through physical media:

- Wired links

- Wireless links.

The hosts are then connected to the network core which are a set of interconnected routers.

To connect a host to an edge router you would use:

- Residential networks.

- Institutional/enterprise networks.

- Mobile networks.

These connections have the properties:

- Bandwidth/transmission rate.

- Shared or dedicated access.

Cable Internet

This is a single coaxial cable that uses frequency division multiplexing FDM to split the frequency bands for different purposes and users.

graph LR

Host --- cm[Cable Modem] --- Splitter --- od[Other Households] --- CMTS --- ISP

subgraph Cable Headend

CMTS

end

subgraph House

Host

cm

TV

Splitter

end

TV --- Splitter

- CMTS - Cable modem termination system.

- HFC - Hybrid fibre coax

- An asymmetric connection with 40 Mbps - 1.2 Gbps downstream, 30 - 100 Mbps upstream.

Homes share network access to the cable hardened.

Digital Subscriber Line (DSL)

Uses the existing telephone line to central office DSLAM:

- Data over DSL phone line goes to the internet.

- Voice over DSL phone line goes to the telephone network.

graph LR

Host --- cm[DSL Modem] --- Splitter --- DSLAM --- ISP

subgraph Central Office

DSLAM --- tn[Telephone Network]

end

subgraph House

Host

cm

Phone

Splitter

end

Phone --- Splitter

- DSLAM - DSL access multiplexer.

- Voice and data are transmitted at different frequencies over a dedicated line.

- Often asymmetric connection with 24 - 52 Mbps downstream, 3.5 - 16 Mbps upstream.

Home Networks

Often have the following:

- Cable/DSL modem

- Router, firewall, NAT

- Wired Ethernet

- WiFi AP

Several of these functions are often combined into the modem from the provider.

Wireless Networks

A shared wireless access network that connects a host to a router.

- WLANS - Wireless local area networks:

- Typically ~30m

- Often use WiFi IEEE 802.11b/g/n

- Wide area cellular access networks:

- Provide by mobile operators ~10s km

- 10s Mbps

- 3G/4G/5G

Enterprise Networks

Similar home networks but often with separate components and additional hosts.

Sending Packets

For a host to send data on a network it:

- Takes application message.

- Breaks it into smaller chunks, known as packets, of length $L$ bits.

- Transmits packet into access network at a transmission rate $R$.

- $R$ is the link transmission rate or bandwidth.

\[\frac{L\text{ (bits)}}{R\text{ (bits/sec)}}=\text{Packet Transmission Delay}\]

| Term |

Definition |

| Bit |

Propagates between transmitter/ receiver. |

| Physical link |

What lies between the transmitter and receiver. |

| Guided Media |

Signal propagate in solid media: copper, fibre. |

| Unguided Media |

Signals propagate freely: radio. |

Twisted Pair (TP)

Two twisted insulated copper wires. This is the basis of the cable used for Ethernet.

- Cat 5 - 100Mbps to 1Gbps

- Cat 6 - 1 to 10Gbps

Coaxial Cable

Two concentric copper conductors. Multiple frequency channels are put on a cable to gain additional bandwidth.

Fibre Optic cable

Glass fibre carrying light pulses, each pulse a bit.

- High speed operation ~10-100s Gbps

- Low error rate as it is immune to electromagnetic interference.

The signal is carried in the electromagnetic spectrum. There is no physical wire. There are propagation effects from the environment:

- Reflection

- Obstruction

- Interference

There are several types of radio link types:

- Terrestrial microwave:

- WiFi

- Wide-Area

- Satellite

- Up to 45 Mbps per channel.

- 270 ms end to end delay.

- Can gain lower latency by using low orbit vs geosynchronous satellites.

The network core is a mesh of interconnected routers. They use packet-switching to break the application-layer messages into packets:

- Forward packets from one router to the next, across links on path from the source to the destination.

- Each packet is transmitted at full link capacity.

Store and Forward

The entire packet must arrive a a router before it can be transmitted on to the next link.

Including the transmission delay ($\frac L R$) the end-end delay is:

\[\text{E2E}=\frac{2L} R\]

this assumes zero propagation delay.

Queuing Delay & Loss

If the arrival rate exceeds the transmission rate of a link then:

- Packets will queue, waiting to be transmitted on the output link.

- Packets can be dropped if the memory buffer in the router fills up.

Network Core Functions

These are the core functions from the perspective of a router.

Forwarding

This is a local action that moves the arriving packets from the router’s input link to the appropriate router output link.

Routing

This is a global action that determines the source-destination paths taken by packets.

Routing algorithms are used to do this.

Circuit Switching

This is an alternative to packet switching. End-end resources are allocated to be reserved for a call between the source and destination.

- Dedicated resources

- There is no sharing and you are guaranteed performance.

- Circuit segment is idle if not used by a call.

- Commonly used in traditional telephone networks.

Packet switching is better suited to the internet as communication is generally is bursts with lots of idle time.

Frequency Division Multiplexing (FDM)

By using a modulation technique, you can split the bandwidth by frequency and transfer data on a circuit at many different frequencies.

Time Division Multiplexing (TDM)

This method splits the entire bandwidth into time slots that can be assigned to certain users.

Circuit Switching Evaluation

- Good for bursty data as we can take advantage of:

- Resource sharing.

- No call setup.

- Excessive congestion is possible:

- Packet delay and loss due to buffer overflow.

- Protocols need to reliable data transfer, congestion control.

- Bandwidth guarantees are still required for video and audio streaming.

Internet Structure

In order to connect all the international ISPs together we use global ISPs. These global ISPs are then joined by internet exchange points (IXPs). Additionally content provider networks may run their own networks to bring their content closer to edge nodes.

flowchart TD

t11[Tier 1 ISP 1]

t12[Tier 1 ISP 2]

cdn[Content Provider]

ixp1[IXP]

ixp2[IXP]

ixp3[IXP]

r1[Regional ISP]

r2[Regional ISP]

a1[Access ISP]

a2[Access ISP]

a3[Access ISP]

a4[Access ISP]

a5[Access ISP]

a6[Access ISP]

a7[Access ISP]

a8[Access ISP]

a9[Access ISP]

t11 --- t12

t11 --- ixp1 & r1

t12 --- cdn

t12 --- ixp2 & ixp3 & r2

cdn --- ixp1 & ixp2 & ixp3

ixp1 --- r1 & a1

ixp2 --- r1 & r2

ixp3 --- r2

r1 --- a1 & a2 & a3 & a4

r2 --- a5 & a6 & a7 & a8 & a9

- Tier 1 - Commercial ISPs

- Have national and international coverage.

- Content Provider Networks - Private networks

- Connects it data centres to the internet, often bypassing tier 1 and regional ISPs.

- IXPs - Internet Exchange Points

- Connect ISPs to one-another.

- Access ISPs

- The people you pay for internet service.

Delay

There are several types of delay that a packet can experience:

- Nodal Processing ($d_\text{proc}$)

- Checking for bit errors.

- Determining the output link.

- Generally < 1 ms

- Queuing Delay

- Time waiting at output link for transmission.

- Depends on the congestion level of the router.

-

Transmission Delay

\[d_\text{trans}=\frac L R\]

- $L$ - Packet length in bits.

- $R$ - Link transmission rate in bps.

-

Propagation Delay

\[d_\text{prop}=\frac d s\]

- $d$ - The length of the physical link.

- $s$ - The propagation speed on the physical media ($~2\times 10^8$ ms).

This comes together to give the total packet delay of:

\[d_\text{nodal} = d_\text{proc} +d_\text{queue} +d_\text{trans} +d_{prop}\]

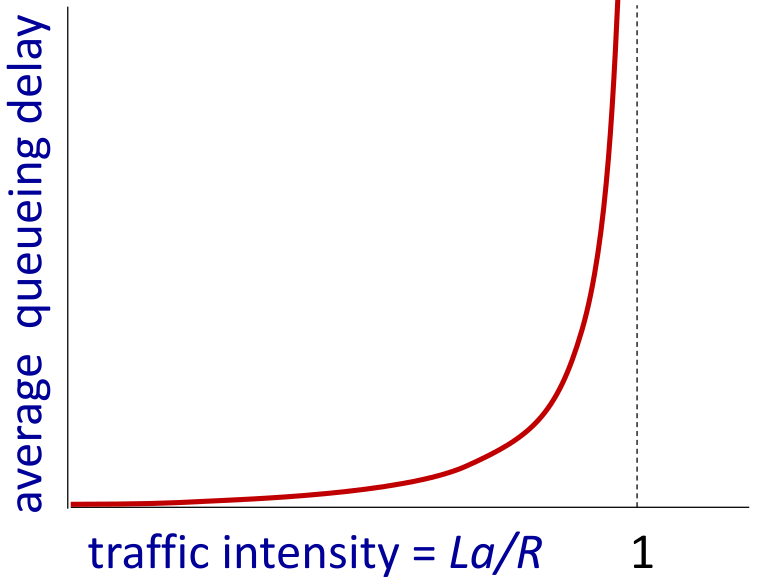

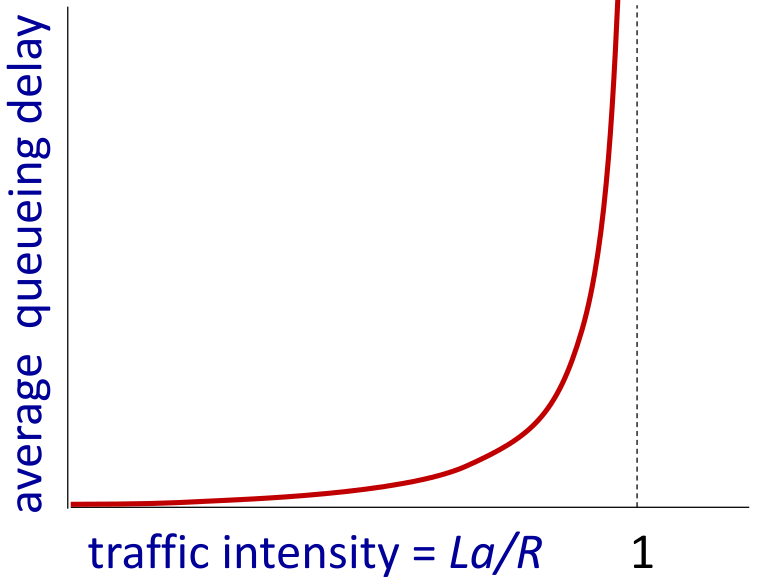

Traffic Intensity

This is calculated using the following equation:

\[\text{Traffic Intensity}=\frac{La} r\]

- $R$ - Link bandwidth in bps.

- $L$ - Packet length in bits.

- $a$ - Average packet arrival rate.

Queuing delay increases exponentially with the traffic intensity.

For small values then the delay is small but if the delay is >1 then packets are dropped.

traceroute

This is a program that can measure the delay to all the ‘hops’ on a packet’s journey.

Throughput

Throughput is the rate at which bits are being sent from sender to receiver. This can be measured as:

- Instantaneous - Rate at a given point in time.

- Average - Rate over a longer period of time.

The throughput is limited by the slowest link on a packet’s path.

The internet was not originally designed with security in mind. This means that current methods have been patched onto the existing layers of the internet.

Denial of Service

This method of network abuse uses several computers to send traffic to a server in order to overwhelm it.

Packet Sniffing

On broadcast media, like Ethernet and WiFi, you are able to record the transmitted packets for later analysis.

These packets include all transmitted data so sensitive information, like cookies and passwords, can be captured on encrypted sites.

IP Spoofing

Packets can be sent with a false source address. This can complete actions on behalf of an already authenticated machine.

1961-1972 - Early Packet-switching Principles

- 1961 - Kleinrock - Queuing theory shows effectiveness of packet switching.

- 1964 - Baran - Packet-switching in military nets.

- 1967 - ARPAnet conceived by Advanced Research Projects Agency.

- 1969 - First ARPAnet node operational.

- 1972 - ARPAnet public demo.

- NCP - Network control protocol is the first host-host protocol.

- First e-mail program.

- ARPAnet has 15 nodes.

1972-1980 - Internet Working, New & Proprietary Nets

- 1970 - ALOHAnet satellite network in Hawaii.

- 1974 - Cerf & Kahn - Architectures for interconnecting networks.

- Minimalism, autonomy - no internal changes required to interconnect networks.

- Best effort service model.

- Stateless routing.

- Decentralised control

- 1976 - Ethernet at Xerox PARC.

- Late 70s - Proprietary architectures: DECnet, SNA, XNA.

- 1979 - ARPAnet has 200 nodes.

1980-1990 - New Protocols, A Proliferation of Networks

- 1983 - Deployment of TCP/IP

- 1982 - SMTP e-mail protocol defines.

- 1983 - DNS defines for name-to-IP-address translation.

- 1985 - FTP protocol defined.

- 1988 - TCP congestion control.

The french government gives free network access devices to access the Minitel network.

1990-2000 - Commercialisation, The Web, New Applications

- Early 1990s - ARPAnet decommissioned.

- 1991 - NSF lifts restrictions on commercial use of NSFnet (decommissioned 1995).

- Early 1990s - The Web

- Hypertext (Bush - 1945, Nelson - 1960s)

- HTML, HTTP (Berners-Lee)

- 1994 - Mosaic, later Netscape.

- Late 1990s - Commercialisation of the web.

- Late 1990s-200s:

- More killer apps - Instant messaging, P2P file sharing.

- Network security to forefront.

- 50 millions hosts, 100 million users.

- Backbone links running at Gbps

2005-Present - More applications, greater proliferation.

- 18 billion connected device.

- Aggressive deployment of broadband.

- Online social networks.

- Service providers (Google, FB…) create their own networks.

- Enterprises run their services in the cloud.

Defining protocol layers helps in dealing with complex systems:

- Explicit structure allows identification, relationship of complex system’s pieces.

- Layered reference model for discussion.

- Modularisation eases maintenance, updating of system.

- Change in layer’s service implementation is transparent to rest of system.

Internet Protocol Stack

ISO/OSI Reference Model

The OSI reference model also includes:

- Presentation - Allows the applications to interpret the meaning of data.

- Encryption, compression, machine specific conventions.

- Session - Synchronisation, check-pointing and recovery of data exchange.

The internet stack is missing these layers. If they are need they must be implemented in the application.

| Application |

| Presentation |

| Session |

| Transport |

| Network |

| Data Link |

| Physical |

HTTP

There are two types of HTTP messages:

HTTP messages are encoded in ASCII. Here is one now:

GET / HTTP/1.1

Host: comp211.gairing.com

User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:92.0) Gecko/20100101 Firefox/92.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

Accept-Language: en-GB

Accept-Encoding: gzip, deflate

DNT: 1

Connection: keep-alive

Upgrade-Insecure-Requests: 1

Only the GET and Host: lines are required for a valid request.

- The top line is the request line. You can make requests such as

GET, POST and HEAD.

- The rest of the lines are called the header lines. They specify certain parameters that the browser can set.

All lines end with a \r\n to indicate a carriage return and line feed. There is also an extra one at the end of the header lines.

<method> <URL> <version>\r\n # request line

<header field name> <value>\r\n # header lines

...

\r\n

<entity body> # the requested body

Other HTTP Request Messages

POST method:

- Web page often includes form input.

- User input sent from client to server in entity body of HTTP

POST request message.

GET method (for sending data to server):

HEAD method:

- Requests headers (only) that would be returned if specified URL were requested with an HTTP

GET method.

PUT method:

HTTP Response

This is the response that the server sends back to a client:

HTTP/1.1 200 OK

Content-Type: text/html

Content-Length: 417

Connection: keep-alive

Keep-Alive: timeout=15

Date: Mon, 04 Oct 2021 11:40:11 GMT

Server: Apache

Last-Modified: Thu, 03 Oct 2019 20:28:35 GMT

ETag: "1a1-594076e0ed808"

Accept-Ranges: bytes

<HTML>

<HEAD>

<TITLE>This is just a simple HTML page</TITLE>

</HEAD>

<BODY BGCOLOR="FFFFFF">

<HR>

<H1>This is a Header</H1>

<H2>This is a Medium Header</H2>

Send me mail at <a href="mailto:support@yourcompany.com">

support@yourcompany.com</a>.

<P> This is a new paragraph!

<P> <B>This is a new paragraph!</B>

<BR> <B><I>This is a new sentence without a paragraph break, in bold

italics.</I></B>

<HR>

</BODY>

</HTML>

- The top line is the status line.

- Next are header lines.

- Then the data requested.

HTTP Response Status Codes

| Code |

Definition |

200 OK |

Request succeeded, requested object later in this message. |

301 Moved Permanently |

Requested object moved, new location specified later in this message. (In Location: field.) |

400 Bad Request |

Request msg not understood by server. |

404 Not Found |

Requested document not found on this server. |

505 HTTP Version Not Supported |

|

There are three major components to e-mail:

- User Agents

- Such as outlook or geary.

- Mail servers

- Mailbox - contains incoming messages for user.

- Mesage Queue - of outgoing messages.

- SMTP Protocol - Between mail server to send email messages.

- The protocol: simple mail transfer protocol (SMTP)

SMTP

The standard for this protocol is RFC 5321.

- Uses TCP on port 25.

- Direct transfer from the sending to receiving server.

- Three phases of transfer:

- Handshaking

- Transer of Messages

- Closure

- Command/Response Interaction (like HTTP):

- Commands: in ASCII.

- Response: Status code and phrase.

Example SMTP Request

SMTP requests are sent as multiple objects, sent in a multi-part message. The message is split based on who is responding.

220 EventMachine SMTP Server

250 Ok EventMachine SMTP Server

MAIL FROM: <sender@example.com>

RCPT TO: <receiver@example.org>

SUBJECT: Hello

Hi Bob, How's the weather? Alice.

.

The message is ended with \r\n.\r\n. Where \r is a carriage return and \n is a newline.

SMTP is defined in RFC 531 (like HTTP). Additionally, RFC 822 defines the syntax for e-mail messages (like HTML).

An SMTP message can be split into two parts:

- Header Lines:

- Body - Message with ASCII characters only.

Mail Access Protocols

SMTP can send mail but in order to retrieve it and read the contents you must use other protocols:

- SMTP - Delivery and storage of e-mail.

- IMAP (Internet mail access protocol RFC 3501) - Messages stored on server, provides retrieval, deletion & folders of stored messages.

- HTTP - Provides web-based interfaces on top of SMTP & IMAP.

Network Applications

These are programs that:

- Run on different end systems.

- Communicate over the network.

As this is a layered system there is no need to write software for the network-core devices.

Client-Server Architecture

The server is:

- Always on host.

- Permanent IP address.

- Can use data centres for scaling.

The clients:

- Communicate with the server.

- May be intermittently connected.

- May have a dynamic IP address.

- Don’t communicate directly with each other.

Peer-to-Peer (P2P) Architecture

This architectures includes:

- No always on server.

- Arbitrary end system directly communicating.

- Peers request service from other peers and provide service to other peers.

- Self scalability - New load also brings more service capacity.

- Peers are intermittently connected and change IP addresses.

Processes Communicating

A process is a program running within a host:

- Within the same host, two process communicate using inter-process communication (defined by the OS).

- Processes in different hosts communicate by exchanging messages.

Sockets

Processes send and receive messages to and from its socket:

- Sending a message from a socket relies on a transport infrastructure behind the port.

- There should be two sockets involved (one to send and one to receive).

Addressing Processes

In order to identify a process you need the system’s IP address and the port number associated with the process and the host.

Examples are:

- HTTP server: port 80

- SMTP server: port 25

Application-Layer Protocols

The protocol for the application layer must define the following things:

- Types of messages exchanged.

- Message syntax.

- Message semantics

- Rules for when and how processes send & respond to messages.

You can use open protocols:

- Defined in RFC’s

- Everyone has access to the protocol definition.

- Allows for interoperability.

Or proprietary protocols:

- Are made in-house and are not open to the public.

Service Requirements

- Data Integrity

- You may need 100% reliable data transfer for some types of data transfer.

- Media streaming can often compensate for loss.

- Timing

- Some apps require low latency for effective use of the application.

- Throughput

- Media streaming often requires a minimum throughput to be effective.

- Security

Common Applications

| Application |

Data Loss |

Throughput |

Time Sensitive |

| File transfer/dowlad |

No loss |

Elastic |

no |

| E-mail |

No Loss |

Elastic |

no |

| Web documents |

No loss |

Elastic |

no |

| Telephony |

Loss-tolerant |

Audio: 5 Kbps - 1 Mbps, Video 2 Mbps - 8 Mbps |

Yes - 10ms |

| Streaming |

Loss-tolerant |

Same as above |

Yes, few seconds |

| Interactive games |

Loss-tolerant |

Kbps+ |

Yes - 10ms |

| Text messaging |

No loss |

elastic |

Dependant on application |

Internet Transport Protocols

TCP

This method provides:

- Reliable Transport

- Flow Control

- Sender won’t overwhelm the receiver.

- Congestion Control

It doesn’t provide:

- Timing

- Minimum throughput guarantee

- Security

This protocol is connection-oriented as a setup it required between teh client and server processes.

UDP

This method provides:

It doesn’t provide:

- Reliable Transport

- Flow Control

- Congestion Control

- Timing

- Minimum throughput guarantee

- Security

This method has no guarantees. However, this enables the fastest connections and quickest setup times.

Security

TCP and UDP sockets provide no security methods, as a result there are additional protocols that allow this service.

Transport layer security (TLS) provides:

- TCP encrypted connections.

- Data integrity

- End-point authentication.

TLS is implemented in the application layer. This means that:

- Apps use TLS libraries that, in turn, use TCP.

- TLS socket API allows clear-text to be sent to the socket to traverse the network encrypted.

In order to establish a TCP connection and send commands to a server you can use the following skeleton code:

import java.io.*;

import java.net.*;

public class EmailSender

{

public static void main(String[] args) throws Exception

{

// Establish a TCP connection with the mail server.

Socket socket = new Socket("35.246.112.180",1025);

// Create a BufferedReader to read a line at a time.

InputStream is = socket.getInputStream();

InputStreamReader isr = new InputStreamReader(is);

BufferedReader br = new BufferedReader(isr);

// Read greeting from the server.

String response = br.readLine();

System.out.println(response);

if (!response.startsWith("220")) {

throw new Exception("220 reply not received from server.");

}

// Get a reference to the socket's output stream.

DataOutputStream os = new DataOutputStream(socket.getOutputStream());

// Send HELO command and get server response.

String command = "HELO alice\r\n";

System.out.print(command);

os.writeBytes(command);

response = br.readLine();

System.out.println(response);

if (!response.startsWith("250")) {

throw new Exception("250 reply not received from server.");

}

}

}

SMTP

After the header, and before the body, of the email there must be a blank line. This is required for the assignment.

DATA

SUBJECT: Hello

This is the body.

.

Java Sockets

To open a socket use the code:

import java.net.*;

Socket socket = new Socket("ip.address", <port>);

Reading Sockets

To read the socket you can use the following:

import java.io.*

InputStream is = socket.getInputStream();

InputStreamReader isr = new InputStremReader(is);

BufferedReader br = new BufferedReader(isr);

The BufferedReader allows you to read the stream a line at a time.

To read the buffer to a variable you can use the following:

String response = br.readLine();

Writing to Sockets

To send data you must first setup an output stream:

DataOutputStream os = new DataOutputStream(socket.getOutputStream());

To write a variable to the stream you use:

Assignment 1

The instructions for this assignment are available here.

A web page is a set of several referenced objects which are accessible by their URL:

\[\underbrace{\text{www.someschool.edu}}_\text{host name}\text{/}\underbrace{\text{someDept/pic.gif}}_\text{path name}\]

HTTP

This is the application layer protocol for the web:

- It uses a client server model.

- Clients request and receive, servers can return objects in response to a request.

- HTTP uses TCP over port 80.

-

HTTP is stateless - No information about past client requests are held.

Protocols that maintain state are complex. The past history must be maintained and if the client or server crashes their views of state may become inconsistent.

Types of Connections

There are two different types of HTTP connection:

Non-Persistent HTTP

- TCP connection opened.

- At most one object sent over the TCP connection.

- TCP connection closed.

Persistent HTTP

- TCP connection opened.

- Multiple objects can be sent over a single TCP connection.

- The connection is closed when finished.

Round Trip Time (RTT)

This is the time for a small packet to travel from client to server and back.

For HTTP 1.0 (non-persistent) the response time is:

\[\text{HTTP Response Time}=2\times \text{RTT}+\text{File Transmission Time}\]

from the following sequence diagram:

sequenceDiagram

Note left of client: Initiate TCP

client ->> server: Open TCP

activate server

server ->> client: TCP Open

Note left of client: Request File

client ->> server: Request File URL

server ->> client: Transmit File

deactivate server

Note left of client: File Received

This is an issue as there is additional overhead in order to open and close the single TCP connections.

In HTTP 1.1 (persistent) you can have as little as one RTT for all referenced objects as the connection is left open.

Cookies

Cookies are used to maintain user state. Many websites use four components to achieve this:

- Cookie header line of HTTP response message.

- Cookie header line in next HTTP request message.

- Cookie file kept on user’s host, managed by user’s browser.

- Back-end database at website.

A cookie is just a unique ID number stored in a file.

sequenceDiagram

client ->> server: Usual HTTP request msg

server ->> database: Create ID entry

server ->> client: Usual HTTP response & "set-cookie:1678"

client ->> server: Usual HTTP request msg & "cookie:1678"

server -> database: Access

Note over server, database: Cookie specific action

server ->> client: Usual HTTP response msg

note left of client: One week later

client ->> server: Usual HTTP request msg & "cookie:1678"

server -> database: Access

Note over server, database: Cookie specific action

server ->> client: Usual HTTP response msg

HTTP Cookies

Cookies can be used for:

- Authorisation

- Shopping Carts

- Recommendations

- User Session State (Web E-Mail)

Cookies permit sites to learn a lot about you. Third party cookies allow a common identity to be tracked across multiple sites.

Web Caches (Proxy Servers)

Proxy servers allow a client request to be satisfied without involving the origin server.

- If the object is in the cache - The cache returns the object to the client.

- Else - The cache requests the object from the origin server, caches the object and sends the object to the client.

Web caching allows the following:

- Less response time for the client.

- Reducing traffic on an institutions access link.

- Enables poor content providers to operate more effectively.

Conditional GET

This allows servers to check if their cached object is up to date. This allows:

- No object transmission delay.

- Lower link utilisation.

You can specify the date of a cached copy in the request:

If-modified-since: <date>

If the copy is up to date the server will not return the object but the following response:

HTTP/1.0 304 Not Modified

Here is the sequence of interactions:

sequenceDiagram

client ->> server: Request & "If-modified-since:<date>"

alt Object not modified

server ->> client: "HTTP/1.0 304 Not Modified"

else Object modified

server ->> client: "HTTP/1.0 200 OK <data>"

end

DNS allows host-names to be mapped to IP addresses:

- The DNS is implemented as a distributed database on a hierarchy of many name servers.

- It is implemented in the application-layer to put complexity at the network edge.

DNS allows for the following:

- Host-name to IP address translation.

- Host aliasing.

- Mail server aliasing.

- Load distribution:

- Many IP addresses can correspond to one name.

Against Centralised DNS

There are many reasons why you don’t want DNS to be centralised:

- Single point of failure.

- Traffic volume.

- Can be physically distant from clients.

- Maintenance will take down the whole internet.

In order to scale the internet DNS is implemented as a distributed database.

Hierarchy

flowchart

r[Root DNS Servers] --> com[.com DNS Servers] & org[.org DNS Servers] & edu[.edu DNS Servers]

com --> yahoo[yahoo.com DNS Servers] & amazon[amazon.com DNS Servers]

org --> pbs[pbs.org DNS Servers]

edu --> nyu[nyu.edu DNS Servers] & umass[umass.edu DNS Servers]

subgraph Root

r

end

subgraph Top Level Domain

com

org

edu

end

subgraph Authorative

yahoo

amazon

pbs

nyu

umass

end

Root Name Servers

There are 13 logical root name servers with each server being redundant across the world. They are:

- Official last resort name servers.

- Managed by ICANN (Internet Corporation for Assigned Names and Numbers)

- Very important to the function of the internet.

Top Level Domains

TLD servers are responsive for all top level domains (.com, .org, .net, …) and all the country domains (.uk, .fr, .jp, …). There are companies responsible for all top level domains.

Authoritative Servers

There are organisations own DNS servers, providing authoritative host-name to IP mappings for an organisation named hosts.

These can be maintained by an organisation or the service provider.

Local DNS Name Servers

Each ISP has a default name server. When a host makes a DNS query it is sent to it’s local DNS server. This has:

- A cache of recent name-to-address translation pairs.

- Acts as a proxy, forwarding queries into the hierarchy.

Iterated Query

DNS also allows recursive queries.

In the worst case the following would happen when you search for a host-name via DNS:

- Client requests local DNS server.

- Local DNS server requests root DNS server for top level domain.

- Local DNS server requests TLD for authoritative domain.

- Authoritative responds with IP address.

- Local DNS server responds to client with IP address.

Caching Records

Once any name server learns a mapping it is cached:

- Cache entries timeout after some time (TTL).

- TLD servers are typically cache in the local name servers.

- This means taht the root name servers are not often visited.

- Cached entries may be out of date:

- If name host changes IP address it may not be known until all TTLs expire.

- Update and notify mechanisms are proposed in IETF standard RFC 2136.

DNS Record Types

DNS is a distributed databse that stores resource records (RR). The RR schema is:

RR(name, value, type, ttl)

There are several types:

type=A

name is the hostnamevalue is the IP address

type=CNAME

name is an alias name for some canonical name.value is the canonical name.

type=NS

name is the domain.value is the host-name of the authoritative name server for this domain.

type=MX

value is the name of the mail-server associated with name.

This lecture continues from The Application Layer - Network Applications.

File Distribution for Client-Server Model

To send a file to $N$ clients the server must upload $N$ file copies:

- Time to send one copy: $\frac F {u_s}$

- Time to send $N$ copies $\frac{NF}{u_s}$

Each client must download a file copy:

- $d_\min=$ minimum client download rate.

- Minimum client download time: $\frac F{d_\min}$

Therefore the time to distribute $F$ to $N$ clients using the client-server approach is:

\[D_{\text{client to server}}\geq\max\{\frac{NF}{u_s},\frac F{d_\min}\}\]

File Distribution for P2P

Server transmission must upload at least one copy:

- Time to send one copy: $\frac F{u_s}$

Each client must download a file copy:

- Minimum cloent download time: $\frac F{d_\min}$

Additional Clients as aggregate must download $NF$ bits:

- Max upload rate (limiting max download rate) is: $u_s +\sum u_i$

Therefore the time to distribute $F$ to $N$ client using a P2P approach is:

\[D_\text{P2P}>\max\{\frac F{u_s},\frac F{d_\min},\frac{NF}{u_s+\sum u_i}\}\]

As the service capacity increases linearly with $N$ the service is self-scaling.

BitTorrent

- File is divided into 256Kb chunks.

- Peers in the torrent send and receive file chinks.

There are two parties:

- Tracker - Tracks peers participating in the torrent.

- Torrent - The group of peers exchanging chunks of a file.

When a new peer arrives:

- They obtain a list of peers from the tracker.

- Begin exchanging file chunks with the peers in the torrent.

File Chunks

Requesting chunks:

- At any given time, different peers have different chunks.

- Periodically, you ask each peer for a list of chunks that they have.

- You request missing chunks from peers, rares first.

Sending chunks (tit-for-tat):

- You send chunks to those sending you chunks at the highest rate.

- Other peers are choked.

- Re-evalulate to peers every 10 seconds.

- Every 30 seconds, randomly seelct another peer to start sending chunks.

- Optimistically un-choke this peer.

- This new peer may gain rate.

Higher upload rate gets better trading partners, allowing you to get the file faster.

A recap of the content is in Java Socket Programming Recap & Assignment 1.

A socket is a door between the application process and the transport layer. There are two socket types, one for each transport layer (UDP/TCP).

UDP

There is no explicit connection between the client and the server:

- No handshake before data is sent.

- Sender explicitly attache the IP destination address and port to each packet.

- Receiver extracts the sender IP address and port from the received packet.

Transmitted data may be lost or received out of order.

UDP provides unreliable transfer of groups of bytes (data-grams) between client and server.

Example Program

The client in this example sends a string the the server over UDP. The server then converts the string to upper-case and then sends it back.

Java UDP Client

import java.io.*;

import java.net.*;

class UDPClient {

public static void main(String args[]) throws Exception {

// create input stream

BufferedReader inFromUser = new BufferedReader(new InputStreamReader(System.in));

// create client socket

DatagramSocket clientSocket = new DatagramSocket();

// translate host name to IP address using DNS

InetAddress IPAddress = InetAddress.getByName("hostname");

byte[] sendData = net byte[1024]; // message limited to 1024 characters

byte[] receiveData = new byte[1024];

// read message from terminal

String sentence = inFromUser.readLine();

// convert string to byte array

sendData = sentence.getBytes();

// create data-gram with data to send, length, IP address and port

DatagramPacket sendPacket = new DatagramPacket(sendData, sendData.length, IPAddress, 9876);

// send data-gram to server

clientSocket.send(sendPacket);

DatagramPacket receivePacket = new DatagramPacket(receiveData, receiveData.length);

// read data-gram from server

clientSocket.receive(receivePacket);

// extract data from packet

String modifiedSentence = new String(receivePacket.getData());

System.out.println("FROM SERVER:" + modifiedSentence);

clientSocket.close();

}

}

Java UDP Server

import java.io.*;

import java.net.*;

class UDPServer {

public static void main(String args[]) throws Exception {

// create data-gram socket at port 9876

DatagramSocket serverSocket = new DatagramSocket(9876);

byte[] receiveData = new byte[1024];

byte[] sendData = new byte[1024];

while(true) {

// create space for received data-gram

DatagramPacket receive Packet = new DatagramPacket(receiveData, receiveData.length);

// receive data-gram

serverSocket.receive(receivePacket);

String sentence = new String(receivePacket.getData());

// get IP address and port of sender

InetAddress IPAddress = receivePacket.getAddress();

int port = receivePacket.getPort();

String capitalisedSentence = sentence.toUpperCase();

sendData = capitalisedSentence.getBytes();

// create data-gram to send to client

DatagramPacket sendPacket = new DatagramPacket(sendData, sendData.length, IPAddress, port);

// write out data-gram to socket

serverSocket.send(sendPacket);

}

}

}

Compared to UDP there are some differences:

The client must contact the server:

- The server process must first be running.

- The server must have created a socket that welcomes the client’s contact.

Clients contact the server by:

- Creating TCP socket, specifying IP address, port number of server process.

- When the cloent creates a socket the slcinet TCP establishes connection to server TCP.

When contacted by the client, the server TCP creates a new socket for the server process to communcate with that particular client:

- This allows the server to talk iwht multiple clients.

- Source port number are used to distinguish clients.

TCP provides a reliable, in-order byte-stream transfer (pipe).

Example Program

Like the UDP example the client sends a string the the server over TCP. The server then converts the string to upper-case and then sends it back.

Java TCP Client

import java.io.*;

import java.net.*;

class TCPClient {

public static void main(String argv[]) throws Exception {

String sentence;

String modifiedSentence;

// create input stream

BufferedReader inFromUser = new BufferedReader(new InputStreamReader(System.in));

// create client socket and connect to server

Socket clientSocket = new Socket("hostname", 6789);

// create output stream attached to socket

DataOutputStream outToServer = new DataOutputStream(clientSocket.getOutputStream());

// create input stream attached to socket

Buffered Reader inFromServer = new BufferedReader(new InputStreamReader(clientSocket.getInputStream()));

sentence inFromUser.readLine();

// send line to server

outToServer.writeBytes(sentence +'\n');

// read line from server

modifiedSentence = inFromServer.readLine();

System.out.println("FROM SERVER: " + modifiedSentence);

clientSocket.close();

}

}

Java TCP Server

import java.io.*;

import java.net.*;

class TCPServer {

public static void main(String argv[]) throws Exception{

String clientSentence;

String capitalisedSentence;

// create welcoming socket at port 6789

ServerSocket welcomeSocket = new ServerSocket(6789);

while(true) {

// wait on welcoming socket for contact by client

Socket connectionSocket = welcomeSocket.accept();

// create input stream attached to socket

BufferedReader inFromClient = new BufferedReader(new InputStreamReader(connectionSocket.getInputStream()));

// create output stream attached to socket

DataOutputStream outToClient = new DataOutputStream(connectionSocket.getOutputStream());

// read in line from socket

clientSentence = inFromClient.readLine();

capitalisedSentence = clientSentence.toUpperCase() +'\n';

// write out line to socket

outToClient.writeBytes(capitalisedSentence);

}

}

}

The transport layer provides logical communication between application processes running on different hosts.

- Sender - Breaks the application messages into segments and passes to the network layer.

- Receiver - Reassembles the segments into messages and passes them to the application layer.

There are two transport layer protocols available:

Network Layer vs. Transport Layer

The network layer is a logical communication between hosts.

This is similar to the postal service.

The transport layer extends this network service to provide a logical communication between processes:

- It relies on and enhances network layer services.

Transport Layer Actions

The sender:

- Is passed an application-layer message.

- Determines segment header fields values.

- Creates a segment.

- Passes the segment to IP.

The receiver:

- Receives a segment from IP.

- Checks header values.

- Extracts the application-layer message.

- Demultiplexes the message up to the application via a socket.

Two Principle Internet Transmission Protocols

TCP (Transmission Control Protocol):

- Reliable, in-order delivery.

- Congestion Control

- Flow Control

- Connection Setup

UDP (User Datagram Protocol)

- Unreliable, unordered delivery.

- No-frills extension of “best-effort” IP.

The following services are not available:

- Delay Guarantees

- Bandwidth Guarantees

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Demultiplexing

Each IP datagram carries the source and destination IP address and a transport-layer segment. This segment includes:

- Source Port

- Destination Port

- Other Header Fields

- Application Data

Connection-less Demultiplexing

This is used by UDP. To create a socket you can use the following Java code:

DatagramSocket socketName = new DatagramSocket(12345);

IP/UDP datagrams with same destination port #, but different source IP addresses and/or source port numbers will be directed to same socket at receiving host.

Connection-Oriented Demultiplexing

TCP socket is identified by a 4-tuple:

- Source IP Address

- Source Port Number

- Destination IP Address

- Destination Port Number

The receiver uses all four values (4-tuple) to direct the segment to the appropriate socket.

Server may support many simultaneous TCP sockets:

- Each socket is identified by its own 4-tuple.

- Each socket is associated with a different connecting client.

Differences

- UDP - Demultiplexing using destination port number (only).

- TCP - Demultiplexing using 4-tuple: source and destination IP addresses, and port numbers.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Connection-Less Transport

This means that there is:

- No handshaking between sender, receiver.

- Each UDP segment handled independently of others.

This saves time, is simpler and gives a small header size.

UDP Uses

UDP is used for the following:

- Streaming multimedia apps (loss tolerant, rate

sensitive).

- DNS

- SNMP

- HTTP/3

If reliable transfer needed over UDP (HTTP/3):

- Add needed reliability at application layer.

- Add congestion control at application layer.

A UDP segment is laid out like the following:

| Source Port |

Destination Port |

| Length |

Checksum |

| Payload |

Payload |

| … |

|

The segment is 32 bits wide.

Internet Checksum

Checksums are used to find flipped bits in transmission.

To calculate the internet checksum:

- Treat the whole contents of the UDP segment as a sequence of 16-bit integers.

-

Complete a one’s complement sum of the integers.

Wraparound the carry-out to the beginning of the addition.

- Put the checksum value in the UDP checksum field.

This only adds weak protection against single bit flips.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Recovering From Errors

We can use acknowledgements and negative acknowledgements from the client to the sender:

Corrupted Acknowledgements

If an acknowledgement is lost then the sender will send a duplicate packet. We can add sequence numbers to keep track of if a packet is a duplicate:

- For stop and wait we can alternate between 0 and 1.

- If there is a duplicate the packet is dropped and another acknowledgement is sent.

Packet Loss

A timer can be put on each packet:

- If an ACK is not received in time the packet is re-transmitted.

- If the re-transmission is a duplicate then the sequence numbers will handle this.

Twice the round trip time is a reasonable starting point for the timer.

Stop & Wait Sequence

Sender:

- Sequence number is added to the packet.

- Send the packet and start timer.

- Check if the ACK is corrupt or a NACK, if it is send the same packet.

- Send the next packet if an ACK is received.

- If packet times out, send it again.

Receiver:

- Must check if received packet is duplicate.

- State indicates whether 0 or 1 is expected packet sequence number.

- If packet is duplicate send ACK.

- If packet is in order then send ACK and pass to the application layer.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Calculating Utilisation

Stop and wait protocols are inefficient as ideally they can only send one packet per round trip time.

To calculate the time to transmit a 1 MB packet into a 1 Gbps channel:

\[D_\text{trans}=\frac LR=\frac{8000\text{ bits}}{10^9\text{ bits/sec}}=8\mu s\]

Where:

- $D$ - Time to transmit packet.

- $L$ - Length of the packet.

- $R$ - Rate of transfer.

To find the utilisation of the medium we can use the following:

\[U_\text{sender}=\frac{\frac LR}{\text{RTT}+\frac LR}\]

With the previous packet, link speed, 30 ms rtt and a stop and wait protocol we can calculate the following:

\[U_\text{sender}=\frac{0.008}{30+0.008}=0.00027\]

This is only 0.027% utilisation.

Pipelining

This allows us to send multiple packets before the first one is acknowledged. There are two main families of protocols:

Go-Back-N Protocol

Sender

There is a window of up to $N$, consecutive transmitted but unACKed packets:

- Cumulative ACK - We must acknowledge all packets up to and including the sequence number included in the client’s ACK.

- On receiving ACK(n) - Move window forward to begin at $n+1$.

- Timer is used for the oldest in-flight packet.

- When the timer runs out, re-transmit packet $n$ and all higher sequence number packets in the window.

Due the reuse of sequence numbers we use sequence numbers of $>2N$ (more than 2 times the window size).

Receiver

This is ACK only:

- Always send ACK for correctly-received packet so far, with highest in-order sequence number.

- May generate duplicate ACKs

- Need only remember the next expected sequence number.

On receipt of out-of-order packet:

-

Can discard (don’t buffer) or buffer for later.

This is an implementation decision.

-

Re-ACK packet with highest in-order sequence number.

Selective Repeat Protocol

In this method the receiver individually acknowledges all correctly received packets.

- Buffers packets, as needed, for eventual in-order delivery to the upper layer.

The sender times-out/re-transmits individually for each unACKed packet:

- Sender maintains timer for each unACKed packet.

The sender window:

- Has a size of $N$ consecutive seq numbers.

- The timers keep track of unACKed packets.

Sender

Data from above:

- If next available sequence number in window, send packet.

Timeout(n):

- Resend packet $n$, restart timer.

ACK(n) in [sendbase,sendbase+N]:

- Mark packet $n$ as received.

- If $n$ smallest unACKed packet, advance window base to next unACKed sequence number.

Receiver

Packet $n$ in [rcvbase, rcvbase+N-1]:

- send ACK(n)

- Out-of-order - buffer.

- In-order - deliver (also deliver buffered, in-order packets), advance window to next not-yet-received packet.

Packet $n$ in [rcvbase-N,rcvbase-1] (already received):

Otherwise:

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

TCP Features

TCP has the following features:

- Point-to-Point - one sender, one receiver

- Reliable, In-Order Byte Stream - no “message boundaries”

- Full Duplex Data:

- Bi-directional data flow in same connection.

- MSS - maximum segment size.

- Cumulative ACKs

- Pipelining - TCP congestion and flow control set window size.

- Connection-Oriented - Handshaking (exchange of control messages) initialises sender, receiver state before data exchange.

- Flow Controlled - Sender will not overwhelm receiver.

TCP Segment Structure

- Sequence Numbers - Count the bytes of data in the byte-stream (not the sequence of the segments).

- Acknowledgements:

- Acknowledge the sequence number of the next byte expected from the other side.

- Cumulative ACK.

TCP Sequence Numbers & ACKs

Here is an example of a simple telnet scenario:

sequenceDiagram

note left of A: User types 'C'

A -->> B:Seq=42, ACK=79, data='C'

note right of B: B ACKs receipt of 'C', echoes back 'C'

B -->> A:Seq=79, ACK=43, data='C'

note left of A: A ACKs receipt of echoed 'C'

A -->> B:Seq=43, ACK=80

TCP Round Trip Time & Timeout

The timeout should be longer than the round trip time (rtt) but the rtt varies:

- Too Short - Premature timeout, unnecessary re-transmission.

- Too Log - Slow reaction to segment loss.

To get a SampleRTT we can measure the time from segment transmission until ACK receipt (ignoring re-transmissions). We want to smooth this out so we sample several recent rtts.

To estimate the round trip time we use an exponential weighted moving average:

\[\text{EstimatedRTT} = (1-\alpha)\times \text{EstimatedRTT} + \alpha \times \text{SampleRTT}\]

The influence of past samples decrease exponentially.

To calculate the timeout interval we include a safety margin that takes into account the variance of the SampleRTT:

\[\text{TimeoutInterval} = \text{EstimatedRTT} + 4\times\text{DevRTT}\]

DevRRT is the safety margin. It is calculated as an exponential weighted moving average of SampleRTT deviation from EstimatedRTT:

\[\text{DevRTT} = (1-\beta)\times\text{DevRTT} + \beta\times\lvert\text{SamepleRTT}-\text{EstimatedRTT}\rvert\]

Typically $\beta=0.25$

TCP Sender (Simplified)

This is a simplified sender that ignores duplicate ACKS, flow control and congestion control.

Data received from application:

- Create segment with sequence number.

- Sequence number is the byte-stream number of the first data byte in the segment.

- Start timer if not already running.

- The timer is for the older unACKed segment.

- Expiration interval is the

TimeOutInterval.

Timeout:

- Re-transmit segments that caused the timeout.

- Restart the timer.

ACK Received:

- If ACK acknowledges previously unACKed segments:

- Update what is known to be ACKed.

- Start timer if there are still unACKed segments.

Receiver ACK Generation

This covers RFC 5681:

| Event at Receiver |

TCP Receiver Action |

| Arrival of in-order segment with expected sequence number. All data up to expected sequence number already ACKed. |

Delayed ACK. Wait up to 500ms for next segment. If no segment send ACK. |

| Arrival of in-order segment with expected sequence number. One other segment has ACK pending. |

Immediately send single cumulative ACK, ACKing both in-order segments. |

| Arrival of out-of-order segment higher-than-expected sequence number. Gap detected. |

Immediately send duplicate ACK, indicating sequence number of next expected byte. |

| Arrivial of segment that partially completely fills gap. |

Immediately send ACK, provided that segment start at lower end of gap. |

TCP Fast Re-transmit

If a sender receives three additional ACKs fro the same data (triple duplicate ACKs), resend unACKed segment with the smallest sequence number:

- It is likely that unACKed segment was lost:

- We can resend the data and save waiting for the timeout.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

TCP Flow Control

This is where the receiver sends messages to the sender to slow the rate of transfer. This is so that the incoming data doesn’t overflow the buffer by sending data quicker than it can be processed.

- Free space is advertised in the

rwnd field of the TCP header.

RcvBuffer is set by the socket options (typically 4096 bytes).

- Many operating systems auto adjust

RcvBuffer.

flowchart

subgraph RcvBuffer

us[Used Space]

rwnd["rwnd (Free Space)"]

end

p[TCP Segment Payloads] --> RcvBuffer

RcvBuffer --> a[Application Process]

-

Sender limits the amount of unACKed data to received rwnd.

This guarantees the receive buffer will not overflow.

TCP Connection Management

Before exchanging data the sender and receiver need to handshake. This includes:

- Accepting the incoming connection.

- Agreeing on connection parameters.

Two-Way Handshake

A 2-way handshake is problematic as is gives issues when used over an unreliable medium. Ordinarily it would work like so over a port number $x$:

sequenceDiagram

Client ->> Server: req_conn(x)

note right of Server: ESTAB

Server ->> Client: acc_conn(x)

note left of Client:ESTAB

This can produce half-open connections and duplicate data if the handshake messages are lost and re-transmitted.

Three-Way Handshake

This is a more redundant handshake method than resolved the issue of a two way handshake:

sequenceDiagram

note left of Client: Choose init seq num x, send TCP SYN msg

Client ->> Server: SYNbit=1, Seq=x

note right of Client: SYN SENT

note right of Server: Choose init seq num y, send TCP SYNACK msg ACKing SYN

note left of Server: SYN RCVD

Server ->> Client: SYNbit=1, Seq=y, ACKbit=1, ACKnum=x+1

note right of Client: ESTAB

note left of Client: Received SYNACK(x) indicates server is live.<br>Send ACK for SYNACK, this segment may contain client-to-server data.

Client ->> Server: ACKbit=1, ACKnum=y+1

note left of Server: ESTAB

note right of Server: Received ACK(y) indicates client is live.

Closing a TCP Connection

- Client and server each close their side of the connection:

- Send TCP segment with

FIN bit = 1

- Respond to received

FIN with ACK.

- On receiving

FIN, ACK can be combined with on FIN.

Simultaneous FIN exchanges can be handled.

sequenceDiagram

Client ->> Server: FINbit=1, seq=x

note left of Client: Can no longer send but can receive data.

note right of Server: Can still send data.

note right of Client: FIN_WAIT_1

note left of Server: CLOSE_WAIT

Server ->> Client: ACKbit=1, ACKnum=x+1

note left of Client: Wait for server to close.

note right of Client: FIN_WAIT_2

note left of Server: LAST_ACK

Server ->> Client: FINbit=1, seq=x

note right of Server: Can no longer send data.

note left of Client: Timed wait for 2*max segment lifetime.

note right of Client: TIMED_WAIT

Client ->> Server: ACKbit=1, ACKnum=y+1

note left of Server: CLOSED

note right of Client: CLOSED

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Congestion

Congestion is where too many sources are sending too much data too fast for the network to handle. This can give:

This is different from flow control where one sender is sending data too fast.

End to End Congestion Control

- No explicit feedback from the network.

- Congestion is inferred from observed loss and delay.

- Approach taken from TCP.

Network Assisted Congestion Control

- Routers provide direct feedback to sending and receiving hosts with flows passing through congested router.

- May indicate congestion level or explicitly set a sending rate.

This is used in the: TCP ECN, ATM and DECbit protocols.

TCP Congestion Control - AMID

AMID is a distributed, asynchronous algorithm that optimised congested flow rates network wide.

Sender can increase sending rate until packet loss (congestion) occurs, then decrease the sending rate on loss event.

We do this by using the following two methods:

- Additive Increase

- Increase the sending rate by 1 maximum segment size every RTT until loss is detected.

- Multiplicative Decrease

- Cut the sending rate in half at each loss event.

This results in a saw-tooth behaviour as the protocol probes from bandwidth.

Multiplicative Decrease

In addition to the definition before the sending rate is:

- Cut in half on loss detected by triple duplicate ACK. (TCP Reno)

- Cut to 1 maximum segment size (MSS) when loss is detected by timeout. (TCP Tahoe)

Congestion Window

The congestion window is the number of unACKed packets that can be in flight at any one time.

The behaviour roughly is:

-

Send cwnd bytes, wait for RTT for ACKS, then send more bytes.

\[\text{TCP Rate} \approx\frac{\text{cwnd}}{\text{RTT}}\text{ (bytes/sec)}\]

-

TCP sender limite transmission:

\[\text{LastByteSent} - \text{LastByteAcked}\leq \text{cwnd}\]

-

cwnd is dynamically adjusted in response to observed network congestion (implementing TCP congestion control).

TCP Slow Start

When connection begins, increase the rate exponentially until first loss event:

- Initially

cwnd = 1 MSS

- Double

cwnd every RTT.

- Done by incrementing

cwnd for every ACK received.

To avoid congestion we can switch to linear increases in the cwnd:

- When

cwnd gets to $\frac12$ of its value before timeout.

We can do this with the following implementation:

- Have a variable called

ssthresh.

- On loss event,

ssthresh is set to $\frac12$ of cwnd just before loss event.

TCP Fairness

For a system to be fair, if $K$ TCP sessions share the same bottleneck link of bandwidth $R$, each should have an average rate of $\frac R K$.

TCP is fair as with two competing TCP sessions:

- Additive increase gives slope of 1, as throughput increases.

- Multiplicative decrease decreases throughput proportionally.

This results in equal bandwidth provided both clients have the same RTT.

You can open more TCP connections to the same server to make your connection “unfair”.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

You may want a protocol that is tailored to a specific scenario such as:

- Long fat pipes (many in flight packets).

- Wireless networks (noisy links with high bandwidth).

- Long delay links (long RTT).

- Data centre networks (latency sensitive).

- Low priority TCP traffic.

To facilitate this you can implement transport-layer functions in the application layer, on top of UDP.

QUIC - Quick UDP Internet Connections (HTTP/3)

This is an application layer protocol on top of UDP:

- Increases the performance of HTTP

- Uses a slimmed HTTP/2 protocol and QUIC instead of TLS.

This protocol has the following features:

- Error and Congestion Control - Similar to TCP

- Connection Establishment - Reliability, congestion control, authentication, encryption and state all in one RTT.

- Normally there would be two handshakes, one for TCP and one for TLS.

- Multiple application-level streams can be multiplexed over a single QUIC connection.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Network Layer

The network layer completes two functions:

- Forwarding - Moving packets from a router’s input link to the appropriate router output link.

- Routing - Determining the route taken by packets from source to destination.

Data Plane

This is a local, per-router, function. It determines how a datagram arriving on a routers input port is forwarded to the router output port.

Control Plane

This is a network wide logic. It determines how data is routed across the network.

Per-Router Control Plane

This in an individual routing algorithm that is implemented in each router to interact in the control plane:

- Each router has a table that is can use to forward packets towards its detonation.

- The tables are generated by a distributed algorithm, running on the routers.

Software-Defined Networking (SDN) Control Plane

A remote controller computes and installs forwarding tables in routers.

Routers

Routers generally have the following structure:

flowchart

rp --> lfq

subgraph rip[Router Input Ports]

lt[Line Termination] --> llp[Link Layer Protocol] --> lfq[Lookup, Forwarding & Queuing]

end

rip --> hssf[High-Speed Switching Fabric]

hssf --> rop

subgraph rop[Router Output Ports]

olfq[Datagram Buffer] --> ollp[Link Layer Protocol] --> olt[Line Termination]

end

rp[Router Processing] <--> hssf

subgraph cp["Control Plane (milliseconds)"]

rp

end

subgraph dp["Data Plane (nanoseconds)"]

rip

hssf

rop

end

The ports are structured as follows:

- Line Termination - Physical layer.

- Link Layer - Ethernet.

- Lookup, Forwarding & Queuing - Decentralised switching using the header field values to lookup the output port using a forwarding table.

- This should be completed at line speed.

- Input port queuing can be used if datagrams arrive faster than forwarding rate into the switch fabric.

- Head of the line (HOL) blocking - A datagram at the front of the queue prevents others in the queue from moving forward.

- If too many packets are buffered then they can be dropped and lost.

Longest Prefix Matching

When looking for forwarding table entry for a given destination address, use the longest address prefix that matches the destination address.

| Destination Address Range |

Link Interface |

| 10.24.0.0/16 |

0 |

| 10.24.0.0/24 |

1 |

| 16.2.0.0/20 |

2 |

| Otherwise |

3 |

- 10.24.0.2 would go on interface 1 as it matches more of 10.24.0.0/24 than 10.24.0.0/16.

The number after the slash is the number of bits that define the subnet.

Switching Fabrics

You can switch using the following topologies:

- Switching Via Memory - The packet is copied to the system memory and then the output port.

- This crosses the system bus twice.

- Limited by the memory bandwidth.

- Switching Via Bus - The input port memory is connected directly to the output port memory.

- Switching speed limited by bus bandwidth.

- One packet can be on the bus at a time.

- Switching Via Interconnection Network - A grid of switched interconnects are used that can be used to reduce contention between the input ports.

- You can use multiple stages of switches to send multiple packets at once.

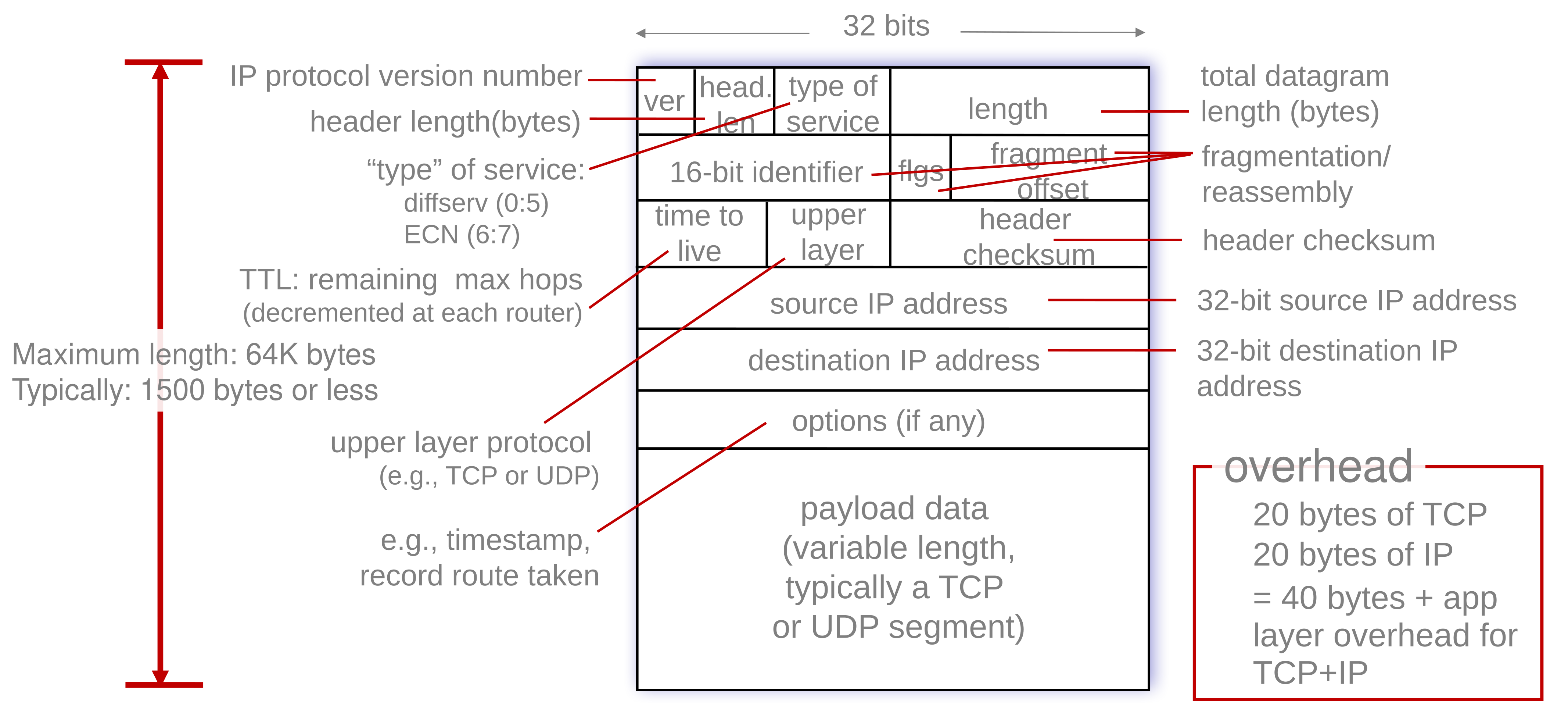

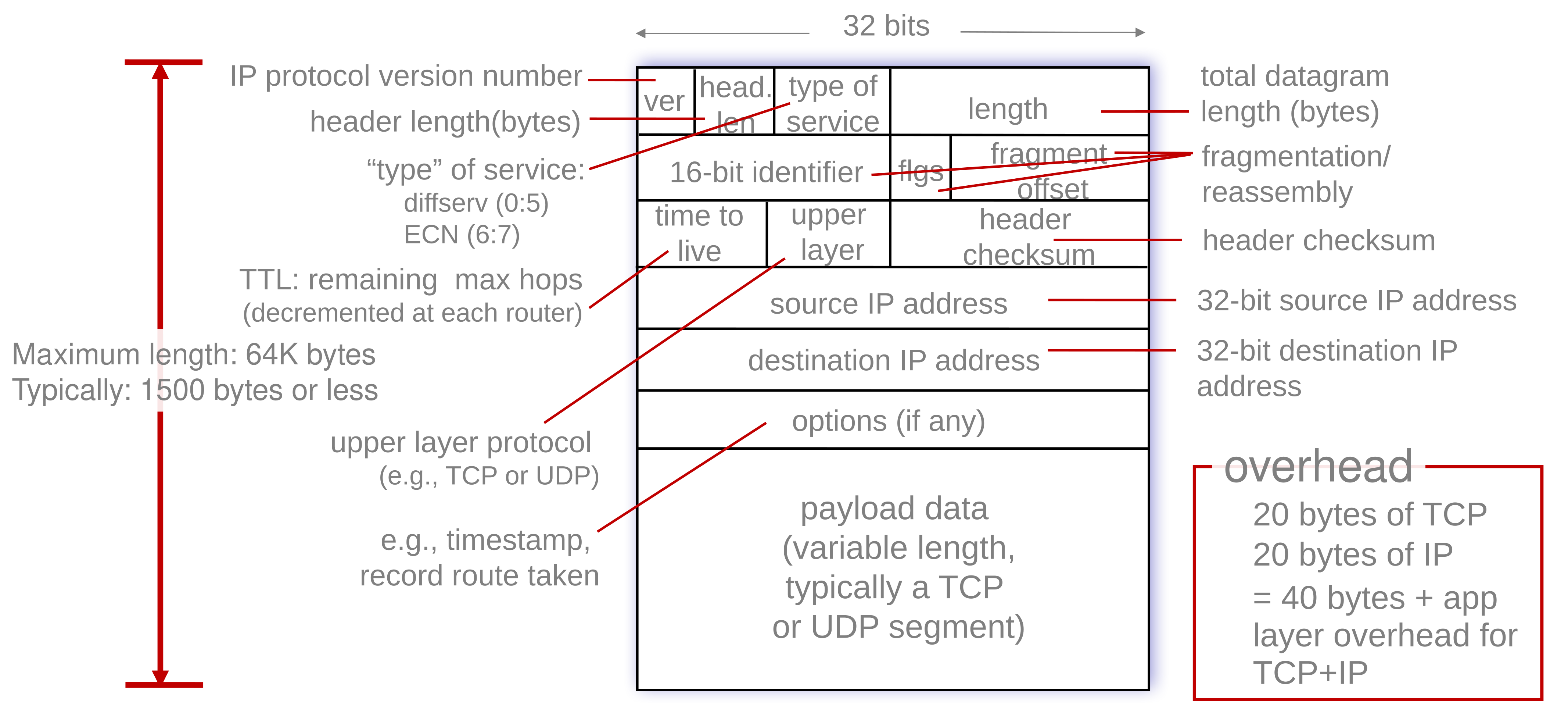

Internet Protocol (IP)

The IPv4 datagram is laid out like so:

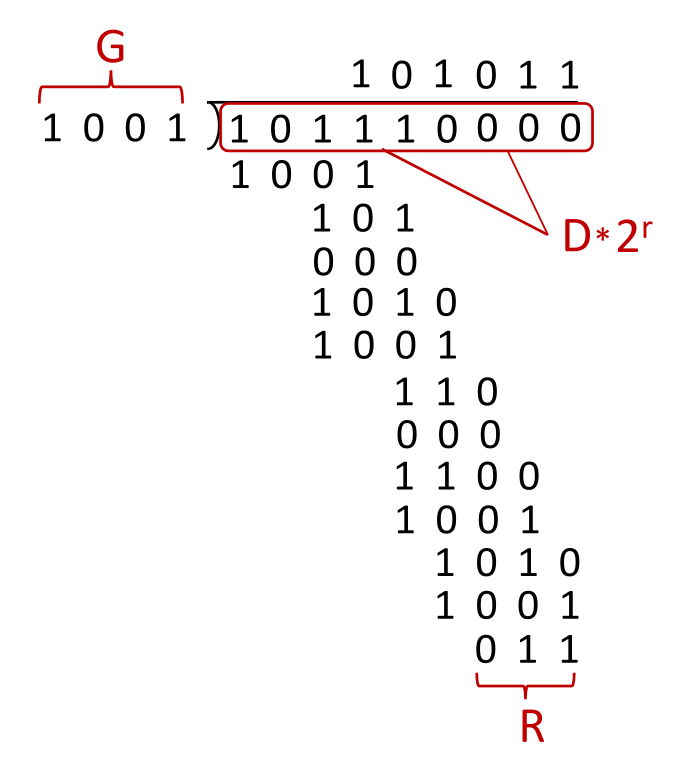

Fragmentation

The link layer can have a maximum transfer unit (MTU). As a result, the network layer, IP datagram must be fragmented into several that must be reassembled at the destination.

For example, with an MTU of 1500 bytes the following datagram:

| Length |

ID |

FragFlag |

Offset |

| 4000 |

x |

0 |

0 |

Would be split into the following fragments:

| Length |

ID |

FragFlag |

Offset |

| 1500 |

x |

1 |

0 |

| Length |

ID |

FragFlag |

Offset |

| 1500 |

x |

1 |

185 |

| Length |

ID |

FragFlag |

Offset |

| 1040 |

x |

0 |

370 |

Each fragment has it’s own 20 byte header, which means that the total data transferred in this example is 40 bytes more than using a single datagram.

The offset is the number of bytes offset divided by 8. This means the data can only be fragmented every 8 bytes.

Addressing

Each network interface has an assigned IP address.

- Subnets

- Subnets are a group of devices that can access each-other without passing through a router.

- Devices in the same subnet have the same high-order bits.

- Subnet Mask - Defines the number of bits that make up the subnet part of the IP address: 192.168.0.1/24.

CIDR

Classless inter-domain routing is a method of defining the subnet and host part of an IP address:

\[\underbrace{11001000\ 00010111\ 0001000}_\text{subnet part}\underbrace{0\ 00000000}_\text{hostname part}\\\]

\[200.23.16.0/23\]

DHCP

There are two ways of getting an IP address on a network:

- Setting a non-conflicting IP address manually.

- Dynamic host configuration protocol from a server on the network you are connecting to.

- Allows for reuse of inactive addresses.

- Can renew a lease on address in use.

sequenceDiagram

Client ->> Server:DHCP Discover

Server ->> Client:DHCP Offer (Send IP address.)

note left of Client: The above steps can be skipped if a previous IP has been assigned.

Client ->> Server:DHCP Request (Send IP address back.)

Server ->> Client:DHCP ACK

You can send broadcast traffic on a network by sending to 255.255.255.255. Port 67 is used for DHCP.

All of this is done as broadcast so multiple DHCP servers on the same network know what IP addresses are used.

DHCP can also offer the following:

- Address of first-hop router.

- Name and IP address of DNS server.

- Network mask.

Network Address Translation

All device in a local network share just one IPv4 address as far as the outside world is concerned.

nwdiag {

network ISP {

Modem [address = "138.76.29.7"];

}

network Local {

address = "10.0.0.0/24"

Modem [address = "10.0.0.1"];

Device1 [address = "10.0.0.2"];

Device2 [address = "10.0.0.3"];

Device3 [address = "10.0.0.4"];

}

- All datagrams leaving have the same source NAT IP (138.76.29.7) but different source port numbers.

- Datagrams with source or destination in the “Local” network have a 10.0.0.0/24 address for the source or destination (as usual).

- Devices in the local network can only have the following local prefixes:

- 10/8

- 172.16/12

- 192.168/16

This works on the router in the following sequence:

- Host 10.0.0.2 sends datagram to 128.119.40.186:80.

- NAT router changes datagram source address from 10.0.0.2:3345 to 138.76.29.7:5001 and notes this in the table.

- Reply arrives from the destination with address of 138.76.29.7:5001.

- The port is looked up in the table and is forwarded to the correct client on the local network.

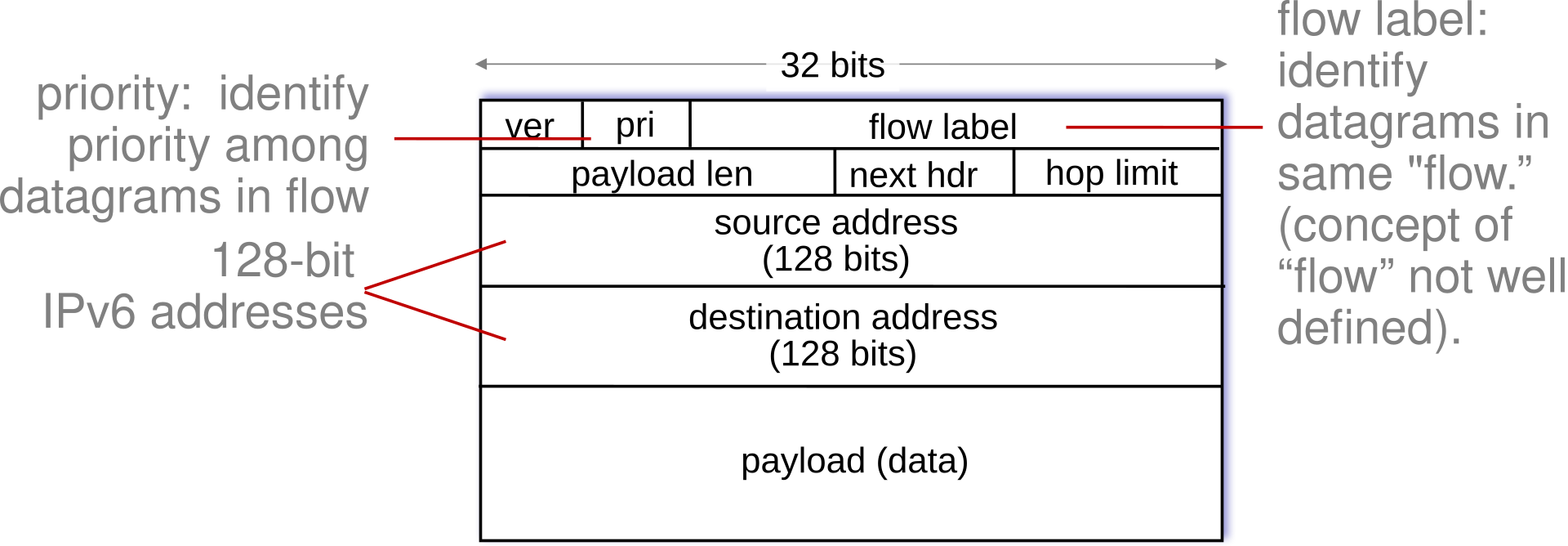

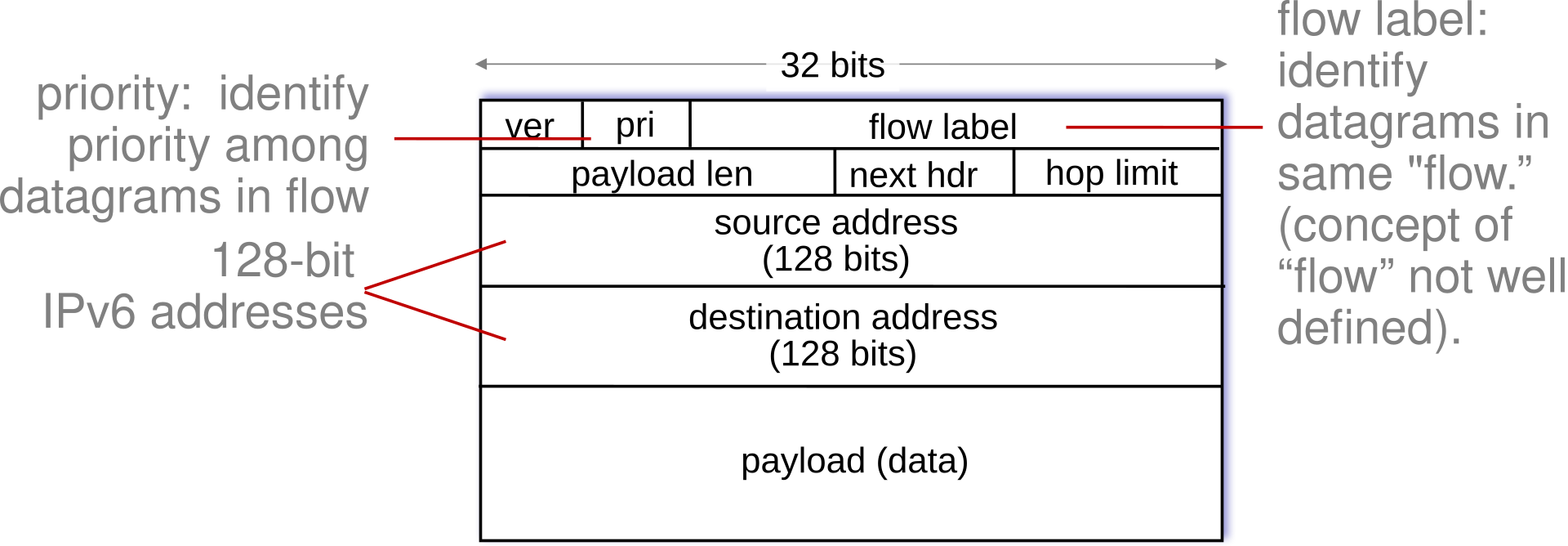

IPv6

IPv4 only used a 32-bit addresses. IPv6 allows for every device to have it’s own unique address on the internet using a 128-bit address. The datagram is laid out like so:

- No checksum (to speed processing as routers)

- No fragmentation or reassembly

- No options

Tunnelling & Encapsulation

To send an IPv6 datagram through a router that only supports IPv4 it must be encapsulated inside an IPv4 packet.

- Routers at the edge of the tunnel should be configured to pack and unpack the IPv6 datagrams.

This is slower through the legacy tunnel but is quicker through the rest of the network.

Generalised Forwarding & SDN

Each router contains a forwarding table (flow table). When a packet arrives we complete a match plus action:

- Match bits in arriving packet and take action.

Simple routers use destination based forwarding where packets are forwarded based on the destination IP address.

Generalised forwarding includes the following features:

- Many IP header fields can determine actions.

- Additional actions are possible including: drop, copy, modify, log.

Match & Action

The flow of packets is enabled by flow tables like so:

Generalised forwarding can use the following in it’s packet handling:

- Match - Pattern values in packet header fields.

- Actions _ For matched packet such as: drop, forward, modify, send to controller.

- Priority - Disambiguate overlapping patterns.

- Counters - Number of bytes and number of packets.

This can be useful for dropping or investigating malicious traffic.

OpenFlow

OpenFlow tables can complete generalised forwarding using headers in the:

- Link Layer (MAC, Ethernet, VLANs)

- Network Layer (IP)

- Transport Layer (TCP/UDP)

The tables are laid out like so:

| Switch Port |

MAC src |

MAC dst |

Eth type |

VLAN ID |

VLAN Pri |

IP Src |

IP Dst |

IP Prot |

IP TOS |

TCP s-port |

TCP dport |

Action |

| * |

* |

* |

* |

* |

* |

* |

* |

* |

* |

* |

22 |

drop |

Drop all traffic on port 22 (SSH).

You can then use these tables to complete the following actions:

- Router

- Match - Longest destination IP prefix.

- Action - Forward out a link.

- Firewall

- Match - IP addresses and TCP/UDP port numbers.

- Action - Permit or deny.

- Switch

- Match - Destination MAC address

- Action - Forward or flood (broadcast).

- NAT

- Match - IP address and port.

- Action - Rewrite address and port.

Orchestrated tables can create network-wide behaviour by connecting a set of routers to an OpenFlow server.

These notes are low-effort, due to catching up in this module. See the videos and slides for more detail.

Routing Algorithms

Routing algorithms choose a sequence of routers for a packet to traverse from source to destination.

We can define the cost of a hop by using link costs:

graph LR

subgraph G

u ---|2| v

v ---|2| x

u ---|1| x

v ---|3| w

x ---|3| w

w ---|1| y

x ---|1| y

w ---|5| z

y ---|2| z

end

This graph can be represented as:

\[G=(N,E)\]

Where $N$ is the set of routers:

\[\{u,v,w,x,y,z\}\]

and $E$ is the set of links:

\[\{(u,v),(u,x),(v,x),(v,w),(x,w),(x,y),(w,y),(w,z),(y,z)\}\]

Classifying Routing Algorithms

Algorithms can have some the following conflicting classifications:

Intra-AS Routing (OSPF)

In this scenario you want a routing algorithm that has the best performance.

Autonomous systems (AS) are a single domain:

- All router in an AS must run the same intra-domain protocol.

- Routers in different AS may run any protocol

- Gateway routers link the edge of an AS to the gateways of other ASs.

Common intra-AS routing protocols are: RIP, EIDRIP, OSPF (essentially identical to IS-IS).

OSPF (Open Shortest Path First) Routing

This is a common and open intra-AS routing protocol.

This is a classic link-state protocol:

- Each router floods OSPF link-state advertisements (directly over IP rather than using TCP/UDP) to all other routers in the entire AS.

- Multiple link costs metrics possible:

- Each router has a full topology, uses Dijkstra’s algorithm to compute the forwarding table.

All OSPF messages are authenticated to prevent malicious intrusion.

Hierarchical OSPF

This allows for a two-level hierarchy with a:

- Backbone

- Localised around boundary routers.

- Local Area

- Include one router from the backbone and a section of the internal network.

nwdiag {

group a {

color = "pink"

b1

a11

a12

a13

}

group b {

color = "lightblue"

b2

a21

a22

a23

}

group c {

color = "lightgreen"

b3

a31

a32

a33

}

internet -- boundary

network backbone {

boundary

b1

b2

b3

}

network area1 {

b1

a11

a12

a13

}

network area2 {

b2

a21

a22

a23

}

network area3 {

b3

a31

a32

a33

}

}

Link state advertisements are flooded only in an area or the backbone. This limits traffic on large networks:

- Each node has a detailed area topology. It only knows the direction to reach other destinations.

Inter-AS Routing (BGP)

In this scenario policy is more important than performance as the data is not internal.

Boarder gateway protocol (BGP) is the de-facto standard inter-domain routing protocol. BGP provides each AS a means to:

- eBGP - Obtain subnet reachability information from neighbouring ASes.

- iBGP - Propagate reachability information to all AS-internal routers.

- Determine good routes to other networks based on reachability information and policy.

nwdiag {

group {

1a

1b

1c

1d

}

group {

2a

2b

2c

2d

}

group {

3a

3b

3c

3d

}

network peering1 {

address = "eBGP"

color = "pink"

1c

2a

}

network peering2{

address = "eBGP"

color = "pink"

2c

3a

}

network AS1 {

address = "iBGP"

color = "lightblue"

1a

1b

1c

1d

}

network AS2 {